Architecture

Trio's blazing-fast latency numbers come from a number of architectural decisions across multiple facets, together with low-level optimizations in the core library. Below we explain the architecture of Trio and dig into networking, setup steps, persistent storage, and the deployment of the entire architecture. None of this is required to deploy the stack on your infrastructure; it is meant for curious backend developers who want to learn the internals.

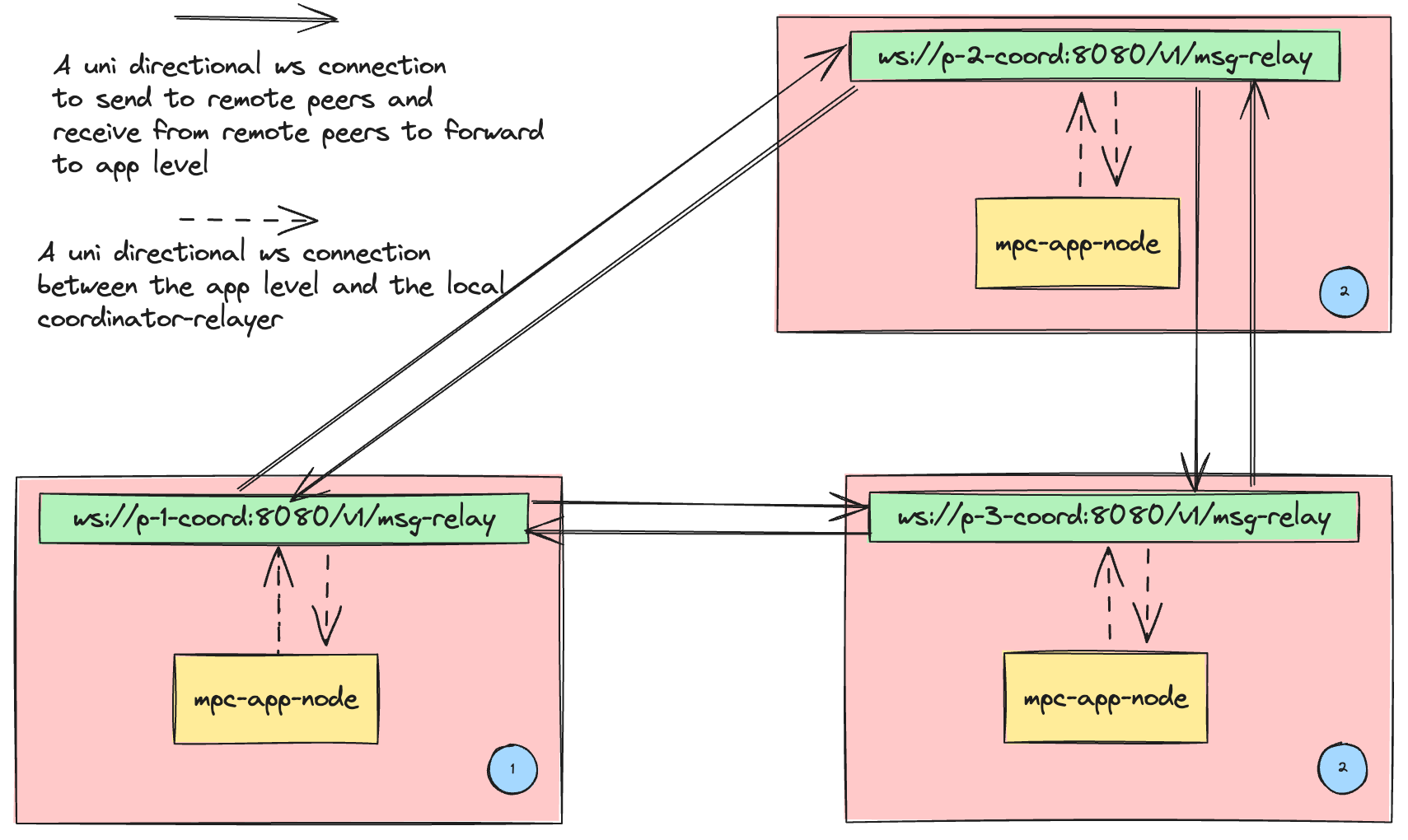

Network

Communication between the nodes and the client goes through a relayer in pull mode: everything is posted to the relayer, and each receiver knows when to ask and what to ask for. This design decision maps best onto the nature of MPC protocols, where every MPC node knows, for the protocol at hand (keygen, keyrefresh, sign, presign, import, export, qc, ws), which types of messages to expect and from which parties. As a result, messages carry no destination property anywhere in the stack.
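To make the pull model concrete, here is a minimal std-only Rust sketch. `Envelope`, `msg_id` and `expected_asks`, and the string id format, are hypothetical illustrations rather than Trio's API. The point is that a message carries only an id and a payload, and a receiver derives, from the protocol alone, which ids it has to ask for in a round.

```rust
use std::collections::HashSet;

/// A relayed message in this sketch: an id and a payload, with no destination field.
#[derive(Debug, Clone, PartialEq)]
pub struct Envelope {
    pub id: String,
    pub payload: Vec<u8>,
}

/// Hypothetical id scheme: one id per (sender, round tag) pair.
pub fn msg_id(sender: usize, tag: &str) -> String {
    format!("{sender}:{tag}")
}

/// Ids that party `me` must ask for in round `tag`: one message from every other party.
pub fn expected_asks(me: usize, parties: usize, tag: &str) -> Vec<String> {
    (0..parties)
        .filter(|&p| p != me)
        .map(|p| msg_id(p, tag))
        .collect()
}

fn main() {
    // Party 0 of 3 knows, from the protocol alone, that round1 needs messages from 1 and 2.
    let asks = expected_asks(0, 3, "round1");

    // Everything is posted to the relayer; the receiver picks what it asked for by id.
    let posted = vec![
        Envelope { id: msg_id(1, "round1"), payload: vec![1] },
        Envelope { id: msg_id(2, "round1"), payload: vec![2] },
        Envelope { id: msg_id(1, "round2"), payload: vec![3] },
    ];
    let wanted: HashSet<String> = asks.into_iter().collect();
    let received: Vec<&Envelope> = posted.iter().filter(|m| wanted.contains(&m.id)).collect();
    assert_eq!(received.len(), 2);
    println!("{received:?}");
}
```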

Connection pools

There are two levels of connections in the stack:


- At the application layer, each MPC node acts as a client of its local relayer over a local connection (the yellow-green connections). On the mpc-app-node side, the relayer is usually embedded as an object in the requests. When the local msg-relay receives a message from the mpc-app-node on its host, it forwards the message to the other relayers. When it receives a message that the mpc-app-node has asked for, it forwards that message to the local mpc-app-node.

- Each relayer is exposed at ws://acronym:8080/v*/msg-relay and, in the current setup, has a direct connection to every other relayer (the green-green connections). These endpoints are hardcoded, usually in a docker compose config. The state machine of each relayer is described below.
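The forwarding rules for one relayer in the mesh can be sketched in std-only Rust. `Frame`, `LocalRelayer`, `from_local` and `from_peer` are hypothetical names, and std `mpsc` channels stand in for the WebSocket connections. As an assumption beyond the text, data that arrives from a peer before the local node has asked for it is parked in an `early` map until the ask comes in.

```rust
use std::collections::{HashMap, HashSet};
use std::sync::mpsc::{channel, Sender};

/// Frames exchanged in this sketch: an ask for an id, or the message for an id.
#[derive(Debug, Clone, PartialEq)]
pub enum Frame {
    Ask(u64),
    Data(u64, Vec<u8>),
}

/// Hypothetical model of one relayer in the full mesh.
pub struct LocalRelayer {
    local: Sender<Frame>,         // connection to the mpc-app-node on this host
    peers: Vec<Sender<Frame>>,    // one direct connection per other relayer
    asked: HashSet<u64>,          // ids the local node asked for and has not received yet
    early: HashMap<u64, Vec<u8>>, // data that arrived before the local node asked (assumed)
}

impl LocalRelayer {
    pub fn new(local: Sender<Frame>, peers: Vec<Sender<Frame>>) -> Self {
        Self { local, peers, asked: HashSet::new(), early: HashMap::new() }
    }

    /// A frame from the local mpc-app-node is forwarded to every other relayer,
    /// unless it is an ask that can already be answered from `early`.
    pub fn from_local(&mut self, frame: Frame) {
        if let Frame::Ask(id) = &frame {
            if let Some(bytes) = self.early.remove(id) {
                let _ = self.local.send(Frame::Data(*id, bytes));
                return;
            }
            self.asked.insert(*id);
        }
        for peer in &self.peers {
            let _ = peer.send(frame.clone());
        }
    }

    /// Data from another relayer reaches the local node only once it has been asked for.
    pub fn from_peer(&mut self, frame: Frame) {
        // Asks arriving from peers are outside the scope of this sketch.
        let Frame::Data(id, bytes) = frame else { return };
        if self.asked.remove(&id) {
            let _ = self.local.send(Frame::Data(id, bytes));
        } else {
            self.early.insert(id, bytes);
        }
    }
}

fn main() {
    let (local_tx, local_rx) = channel();
    let (peer_tx, peer_rx) = channel();
    let mut relayer = LocalRelayer::new(local_tx, vec![peer_tx]);

    // The local node asks for message 42: the ask goes out to the peer relayer.
    relayer.from_local(Frame::Ask(42));
    assert_eq!(peer_rx.try_recv().unwrap(), Frame::Ask(42));

    // The peer relayer later delivers message 42: it is handed to the local node.
    relayer.from_peer(Frame::Data(42, vec![1, 2]));
    assert_eq!(local_rx.try_recv().unwrap(), Frame::Data(42, vec![1, 2]));
}
```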

Exposed crates

- msg-relay-svc: its main entry point exposes an HTTP server on localhost:8080 under `/v1/msg-relay`. Every message received on an incoming connection is dispatched and recorded in `Inner.messages`. Messages are handled through `MsgRelayConnection::send_message(msg)::handle_message(tx, msg)`.


- msg-relay-client: a WebSocket client that communicates with msg-relay-svc.

- msg-relay: the low-level implementation of the relayer object used in msg-relay-svc.

State Transition Logic

Messages are stored in the `Inner.messages` hash map, keyed by `MsgId` with a `MsgEntry` as value. `MsgEntry` is an enum recording the status of a received message: `Ask(waiters)`, where waiters is the list of sender channels waiting for the message, or `Ready(msg)`. When a relayer receives a message, the state machine works as follows (pseudocode):

    MsgRelayConnection::send_message(msg)::handle_message(tx, msg):

    if msg.id not in messages:
        if msg.type == Ask:
            // mark the message as asked and add the sender to the waiter list
            messages.insert(msg.id, MsgEntry::Ask([tx]))
        else:
            // mark the message as ready to be read
            messages.insert(msg.id, MsgEntry::Ready(msg.bytes()))
    else:  // msg.id is already in the hash map
        if msg.type == Ask:
            if messages[msg.id] is Ready(bytes):
                // a ready message has been asked for, so it can be sent
                tx.send(bytes)
            else:
                // someone already asked: add the sender to the waiter list
                messages[msg.id].waiters.push(tx)
        else if messages[msg.id] is Ask(waiters):
            // the asked-for message arrived: send it to every waiter, then mark it ready
            for w in waiters: w.send(msg.bytes())
            messages[msg.id] = MsgEntry::Ready(msg.bytes())
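The four cases of the state machine fit in one `match`. This is a simplified std-only sketch, not the library's code: `RelayState`, `Incoming` and the `[u8; 32]` id are hypothetical stand-ins, and std `mpsc::Sender` replaces the async unbounded channels of the real `MsgRelayConnection`. `HashMap::entry` lets a first ask create the waiter list without a second lookup.

```rust
use std::collections::hash_map::Entry;
use std::collections::HashMap;
use std::sync::mpsc::{channel, Sender};

// Simplified stand-in for the library's MsgId.
pub type MsgId = [u8; 32];

pub enum Incoming {
    Ask(MsgId),           // the sender wants the message with this id
    Data(MsgId, Vec<u8>), // the sender posts the message with this id
}

// The two states described above.
pub enum MsgEntry {
    Ask(Vec<Sender<Vec<u8>>>), // waiters: channels of connections that asked
    Ready(Vec<u8>),            // payload kept until it is asked for
}

#[derive(Default)]
pub struct RelayState {
    pub messages: HashMap<MsgId, MsgEntry>,
}

impl RelayState {
    /// Applies one incoming message from the connection whose outbound channel is `tx`.
    pub fn handle_message(&mut self, tx: &Sender<Vec<u8>>, msg: Incoming) {
        match msg {
            Incoming::Ask(id) => match self.messages.entry(id) {
                // Unknown id: record the asker as the first waiter.
                Entry::Vacant(slot) => {
                    slot.insert(MsgEntry::Ask(vec![tx.clone()]));
                }
                Entry::Occupied(mut slot) => match slot.get_mut() {
                    // Someone already asked: join the waiter list.
                    MsgEntry::Ask(waiters) => waiters.push(tx.clone()),
                    // The message is ready: answer right away.
                    MsgEntry::Ready(bytes) => {
                        let _ = tx.send(bytes.clone());
                    }
                },
            },
            Incoming::Data(id, bytes) => {
                // Store the payload as Ready; if it replaced a waiter list, notify every waiter.
                let previous = self.messages.insert(id, MsgEntry::Ready(bytes.clone()));
                if let Some(MsgEntry::Ask(waiters)) = previous {
                    for waiter in waiters {
                        let _ = waiter.send(bytes.clone());
                    }
                }
            }
        }
    }
}

fn main() {
    let mut state = RelayState::default();
    let (tx_a, _rx_a) = channel();
    let (tx_b, rx_b) = channel();
    let id = [7u8; 32];

    // b asks before a has posted anything: b becomes a waiter.
    state.handle_message(&tx_b, Incoming::Ask(id));
    // a posts the message: it is pushed to b and stored as Ready.
    state.handle_message(&tx_a, Incoming::Data(id, b"hello".to_vec()));
    assert_eq!(rx_b.try_recv().unwrap(), b"hello".to_vec());
}
```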

Workflow Example

- Create a relayer: `let s = SimpleMessageRelay::new();`

- Connect to the relayer from endpoint a: `let a = s.connect();`

- Connect to the relayer from endpoint b: `let b = s.connect();`

- b wants to receive the message with id `msgid` from a: `b.send(msgid, ASK)`

- a sends the message: `a.send(msgid, msg)`
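The workflow steps can be run end to end with a small in-process stand-in. `SimpleMessageRelay::new`, `connect` and `send` follow the names in the steps. Everything else is a hypothetical stand-in, not the library's API: `Body`, `Connection`, `try_recv`, the `u64` id, and std `mpsc` in place of the unbounded async channels. Each connection owns its own delivery channel and shares one message table behind `Arc<Mutex<..>>`, so endpoints can live on different threads.

```rust
use std::collections::hash_map::Entry;
use std::collections::HashMap;
use std::sync::mpsc::{channel, Receiver, Sender};
use std::sync::{Arc, Mutex};
use std::thread;

pub type MsgId = u64;

/// What an endpoint sends: an ask for an id, or the message itself.
pub enum Body {
    Ask,
    Msg(Vec<u8>),
}

enum Slot {
    Waiting(Vec<Sender<Vec<u8>>>), // asked for, not yet posted
    Ready(Vec<u8>),                // posted, kept for later asks
}

type Table = Arc<Mutex<HashMap<MsgId, Slot>>>;

/// Hypothetical in-process relayer mirroring the workflow steps.
#[derive(Default)]
pub struct SimpleMessageRelay {
    table: Table,
}

/// One endpoint: its own delivery channel plus a handle to the shared table.
pub struct Connection {
    tx: Sender<Vec<u8>>,
    rx: Receiver<Vec<u8>>,
    table: Table,
}

impl SimpleMessageRelay {
    pub fn new() -> Self {
        Self::default()
    }

    pub fn connect(&self) -> Connection {
        let (tx, rx) = channel();
        Connection { tx, rx, table: Arc::clone(&self.table) }
    }
}

impl Connection {
    pub fn send(&self, id: MsgId, body: Body) {
        let mut table = self.table.lock().unwrap();
        match body {
            // Ask: answer at once if the message is ready, otherwise wait for it.
            Body::Ask => match table.entry(id) {
                Entry::Occupied(mut e) => match e.get_mut() {
                    Slot::Ready(bytes) => {
                        let _ = self.tx.send(bytes.clone());
                    }
                    Slot::Waiting(waiters) => waiters.push(self.tx.clone()),
                },
                Entry::Vacant(e) => {
                    e.insert(Slot::Waiting(vec![self.tx.clone()]));
                }
            },
            // Message: store it as Ready and deliver it to everyone who was waiting.
            Body::Msg(bytes) => {
                if let Some(Slot::Waiting(waiters)) = table.insert(id, Slot::Ready(bytes.clone())) {
                    for w in waiters {
                        let _ = w.send(bytes.clone());
                    }
                }
            }
        }
    }

    /// Non-blocking read of the next delivered message, if any.
    pub fn try_recv(&self) -> Option<Vec<u8>> {
        self.rx.try_recv().ok()
    }
}

fn main() {
    let s = SimpleMessageRelay::new();
    let a = s.connect();
    let b = s.connect();
    let msgid: MsgId = 1;

    // b asks first; a posts from another thread.
    b.send(msgid, Body::Ask);
    let sender = thread::spawn(move || a.send(msgid, Body::Msg(b"share".to_vec())));
    sender.join().unwrap();
    assert_eq!(b.try_recv(), Some(b"share".to_vec()));
}
```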

Basic Data Objects

    pub struct MsgRelay {
        inner: Arc<Mutex<Inner>>,
    }

    pub struct MsgRelayConnection {
        tx_id: u64,
        tx: mpsc::UnboundedSender<Vec<u8>>,
        rx: mpsc::UnboundedReceiver<Vec<u8>>,
        inner: Arc<Mutex<Inner>>,
    }

    struct Inner {
        conn_id: u64,
        expire: BinaryHeap<Expire>,
        messages: HashMap<MsgId, MsgEntry>, // MsgId -> Ask(waiters) | Ready(msg)
        total_size: u64,
        total_count: u64,
        on_ask_msg: Option<Box<OnAskMessage>>,
    }
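`Inner` keeps an `expire` heap next to `total_size` and `total_count`. The text does not say how they are used. A plausible reading, assumed here, is that each stored message gets a deadline, messages are purged in deadline order, and the totals track what is currently held. `Store`, `insert` and `purge_expired` are hypothetical. Because `BinaryHeap` is a max-heap, wrapping entries in `Reverse` puts the earliest deadline on top.

```rust
use std::cmp::Reverse;
use std::collections::{BinaryHeap, HashMap};
use std::time::{Duration, Instant};

type MsgId = u64;

/// Hypothetical expiry record: when message `id` should be dropped.
#[derive(PartialEq, Eq, PartialOrd, Ord)]
struct Expire {
    at: Instant,
    id: MsgId,
}

#[derive(Default)]
struct Store {
    // Reverse turns the max-heap into a min-heap: the earliest deadline is on top.
    expire: BinaryHeap<Reverse<Expire>>,
    messages: HashMap<MsgId, Vec<u8>>,
    total_size: u64,
    total_count: u64,
}

impl Store {
    /// Stores a message with a time-to-live; ids are assumed unique.
    fn insert(&mut self, id: MsgId, bytes: Vec<u8>, ttl: Duration, now: Instant) {
        self.total_size += bytes.len() as u64;
        self.total_count += 1;
        self.messages.insert(id, bytes);
        self.expire.push(Reverse(Expire { at: now + ttl, id }));
    }

    /// Drops every message whose deadline has passed and returns how many were removed.
    fn purge_expired(&mut self, now: Instant) -> usize {
        let mut removed = 0;
        while let Some(Reverse(next)) = self.expire.peek() {
            if next.at > now {
                break; // the earliest deadline is still in the future
            }
            let Reverse(due) = self.expire.pop().expect("peeked entry exists");
            if let Some(bytes) = self.messages.remove(&due.id) {
                self.total_size -= bytes.len() as u64;
                self.total_count -= 1;
                removed += 1;
            }
        }
        removed
    }
}

fn main() {
    let t0 = Instant::now();
    let mut store = Store::default();
    store.insert(1, vec![0; 10], Duration::from_secs(1), t0);
    store.insert(2, vec![0; 20], Duration::from_secs(5), t0);

    // Two seconds later only message 1 has expired.
    assert_eq!(store.purge_expired(t0 + Duration::from_secs(2)), 1);
    assert_eq!((store.total_count, store.total_size), (1, 20));
}
```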

Naming-Bootstrapping

Setup msg: In a real-world deployment, beyond the generic MPC terminology, there is always a party with a special role that bootstraps the process. This party is called the initiator and must be fully trusted; otherwise the system cannot function. The initiator creates the configuration that sets up the network. This setup message includes:

- the public keys of all other nodes

- a random protocol identifier, unique per protocol: the InstanceID

- the initiator's own verification key for the signature over the setup message

- a signature over all of the above
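The setup message can be pictured as a struct plus the exact bytes the initiator signs. This is a sketch under assumptions: `SetupMsg`, `signed_bytes`, `verify`, and the length-prefixed encoding are hypothetical, and since the signature scheme is not specified here, verification is delegated to a caller-supplied closure. Length-prefixing every field keeps the encoding unambiguous, so two different field splits never produce the same signed bytes.

```rust
/// Hypothetical layout of the initiator's setup message.
pub struct SetupMsg {
    pub party_pks: Vec<Vec<u8>>, // public keys of all other nodes
    pub instance_id: [u8; 32],   // random protocol identifier (InstanceID)
    pub initiator_vk: Vec<u8>,   // initiator's verification key
    pub signature: Vec<u8>,      // signature over all of the above
}

/// Appends a 4-byte big-endian length followed by the field bytes.
fn put(buf: &mut Vec<u8>, field: &[u8]) {
    buf.extend_from_slice(&(field.len() as u32).to_be_bytes());
    buf.extend_from_slice(field);
}

impl SetupMsg {
    /// The bytes covered by the initiator's signature.
    pub fn signed_bytes(&self) -> Vec<u8> {
        let mut buf = Vec::new();
        buf.extend_from_slice(&(self.party_pks.len() as u32).to_be_bytes());
        for pk in &self.party_pks {
            put(&mut buf, pk);
        }
        put(&mut buf, &self.instance_id);
        put(&mut buf, &self.initiator_vk);
        buf
    }

    /// Accepts the message only if it names the initiator key the node already trusts
    /// and the supplied verifier (vk, payload, signature) accepts the signature.
    pub fn verify<F: Fn(&[u8], &[u8], &[u8]) -> bool>(&self, trusted_vk: &[u8], verifier: F) -> bool {
        self.initiator_vk == trusted_vk
            && verifier(
                self.initiator_vk.as_slice(),
                self.signed_bytes().as_slice(),
                self.signature.as_slice(),
            )
    }
}

fn main() {
    let setup = SetupMsg {
        party_pks: vec![vec![1, 2], vec![3, 4]],
        instance_id: [9; 32],
        initiator_vk: vec![5],
        signature: vec![0xAA],
    };
    println!("{} bytes are covered by the signature", setup.signed_bytes().len());
    // Toy verifier for the demo only: it accepts any signature.
    assert!(setup.verify(&[5], |_vk, _payload, _sig| true));
}
```

Checking against a key the node already trusts matters because the verification key travels inside the message itself; trusting only the embedded key would let anyone sign their own setup.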

InstanceId-MessageId-KeyId

Each protocol uses the initiator's InstanceID to derive an id for every message it exchanges. Each msgId is a hash over:

- the InstanceId

- the sender (its public key)

- the tag
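Deriving a msgId from these three inputs can be sketched as follows. The text does not name the hash function or the encoding. This std-only sketch length-prefixes each input and uses `DefaultHasher` (SipHash, 64-bit output, not collision resistant, and not guaranteed stable across Rust releases) purely to stay dependency-free. A real implementation would use a cryptographic hash. `msg_id_preimage` and `msg_id` are hypothetical names.

```rust
use std::collections::hash_map::DefaultHasher;
use std::hash::Hasher;

/// Hash input: InstanceId, sender public key, tag, each with a 4-byte length prefix.
pub fn msg_id_preimage(instance_id: &[u8; 32], sender_pk: &[u8], tag: &[u8]) -> Vec<u8> {
    let mut buf = Vec::with_capacity(44 + sender_pk.len() + tag.len());
    for part in [&instance_id[..], sender_pk, tag] {
        buf.extend_from_slice(&(part.len() as u32).to_be_bytes());
        buf.extend_from_slice(part);
    }
    buf
}

/// Demo-only id: DefaultHasher is NOT a cryptographic hash.
pub fn msg_id(instance_id: &[u8; 32], sender_pk: &[u8], tag: &[u8]) -> u64 {
    let mut h = DefaultHasher::new();
    h.write(&msg_id_preimage(instance_id, sender_pk, tag));
    h.finish()
}

fn main() {
    let instance = [7u8; 32];
    let alice = msg_id(&instance, b"pk-alice", b"round1");
    let bob = msg_id(&instance, b"pk-bob", b"round1");
    // Same inputs give the same id, so a receiver can compute what to ask for.
    assert_eq!(alice, msg_id(&instance, b"pk-alice", b"round1"));
    assert_ne!(alice, bob);
}
```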

The KeyId identifies a key share and acts as its index. Currently it is set only internally, to the generated public key that corresponds to the ecdsa-dkls shares.

Persistent Storage

The library defines generic traits so that pluggable database drivers can serve as persistent storage backends. Examples include Google Storage objects, plaintext disk storage, and SQLite. The traits are generic and composable, so they can be implemented for any underlying database.
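A pluggable, composable storage trait might look like the sketch below. The trait `KeyShareStore`, its two methods, and the backends `MemStore`, `DiskStore` and `Mirrored` are hypothetical; the library's real traits are not shown here. `DiskStore` mirrors the plaintext-disk example (one file per key id, so key ids are assumed to be safe file names). `Mirrored` shows composition: any two backends combine into a third that also implements the trait.

```rust
use std::collections::HashMap;
use std::fs;
use std::io;
use std::path::PathBuf;

/// Hypothetical storage trait.
pub trait KeyShareStore {
    fn put(&mut self, key_id: &str, share: &[u8]) -> io::Result<()>;
    fn get(&self, key_id: &str) -> io::Result<Option<Vec<u8>>>;
}

/// In-memory backend, handy for tests.
#[derive(Default)]
pub struct MemStore(HashMap<String, Vec<u8>>);

impl KeyShareStore for MemStore {
    fn put(&mut self, key_id: &str, share: &[u8]) -> io::Result<()> {
        self.0.insert(key_id.to_string(), share.to_vec());
        Ok(())
    }
    fn get(&self, key_id: &str) -> io::Result<Option<Vec<u8>>> {
        Ok(self.0.get(key_id).cloned())
    }
}

/// Plaintext disk backend: one file per key id under `dir`.
pub struct DiskStore {
    pub dir: PathBuf,
}

impl KeyShareStore for DiskStore {
    fn put(&mut self, key_id: &str, share: &[u8]) -> io::Result<()> {
        fs::create_dir_all(&self.dir)?;
        fs::write(self.dir.join(key_id), share)
    }
    fn get(&self, key_id: &str) -> io::Result<Option<Vec<u8>>> {
        match fs::read(self.dir.join(key_id)) {
            Ok(bytes) => Ok(Some(bytes)),
            Err(e) if e.kind() == io::ErrorKind::NotFound => Ok(None),
            Err(e) => Err(e),
        }
    }
}

/// Composition: write to both backends, read from the first one that has the key.
pub struct Mirrored<A, B>(pub A, pub B);

impl<A: KeyShareStore, B: KeyShareStore> KeyShareStore for Mirrored<A, B> {
    fn put(&mut self, key_id: &str, share: &[u8]) -> io::Result<()> {
        self.0.put(key_id, share)?;
        self.1.put(key_id, share)
    }
    fn get(&self, key_id: &str) -> io::Result<Option<Vec<u8>>> {
        match self.0.get(key_id)? {
            Some(v) => Ok(Some(v)),
            None => self.1.get(key_id),
        }
    }
}

fn main() -> io::Result<()> {
    let dir = std::env::temp_dir().join("keyshare-store-sketch");
    let mut store = Mirrored(MemStore::default(), DiskStore { dir });
    store.put("key-1", b"share bytes")?;
    assert_eq!(store.get("key-1")?, Some(b"share bytes".to_vec()));
    assert_eq!(store.1.get("key-1")?, Some(b"share bytes".to_vec()));
    Ok(())
}
```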

Deployment

- trio-c: all nodes run in the cloud

- trio-h: Trio hybrid mode, with two cloud nodes and one mobile or browser node